SSD Reliability in 2014

Written by Clifford

Introduction

Solid state drives (SSDs) matter because applications demand ever more speed. Earlier generations of SSD, however, had a limited lifespan, a consequence of the physical properties of semiconductors. The industry pushed ahead and found technical solutions to the problem. Costs were also driven down, as the price of SSDs was at first prohibitive.

Now, those who want the fast performance of SSD, without a moving disk to slow things down, can have it. They do not have to worry about how long their system will last. SSDs and HDDs now both have a similar expected lifespan. Here we explain the technical details of that so that you can judge for yourself.

SSD technology overview

Dr. An Wang invented magnetic core memory (a form of non-volatile memory) and patented it in 1955. It was a technological breakthrough. IBM acquired the rights for its mainframes. For the first time ever, technology existed that would allow a computer to hold onto its information when powered off. Hard disk drives (HDDs), which also store data magnetically, became the backbone of the computer industry for 60 years. HDD replaced paper punch cards. But the speed of magnetic HDDs has an inherent limit, set by the moving disk as well as by the disk controller. Modern controllers operate at breathtaking speed, but they cannot operate as fast as, say, the speed of light.

Engineers who left Shockley Semiconductor in 1957 founded Fairchild Semiconductor, and two of them later started Intel. They took the transistor and created the integrated circuit. Semiconductor memory can, like Wang’s magnetic memory, be built to retain its information when powered off. However, it is much more compact, enabling much faster speeds in opening or closing a circuit. Semiconductors were engineered into CPUs to power computers. But engineers realized that semiconductors could also function as storage, if only they did not cost so much. In theory you could store more on them than just programs.

This has become workable over the past few years, as the prices of such devices have fallen a lot.

To understand SSD lifespan and where you might deploy them, it is necessary first to understand how they work.

How SSDs work

Each memory cell in SSD storage holds one or more bits. Its physical properties are such that a single-level cell can handle about 100,000 writes before it wears out.

Multi-level cells (MLC) increase storage capacity over single-level cells (SLC) by storing more than one bit per cell, but this decreases their expected lifespan: an MLC cell is expected to last about 10,000 writes. When manufacturers build flash memory, each die is tested to determine its natural raw error rate. The bit error rate (BER) of an SSD is therefore known, and it is managed by the flash controller and the error correction code. To extend the life of the drive, the controller uses wear leveling to distribute writes evenly across the cells. The rate of errors that remain after the controller’s correction is called the ‘uncorrectable bit error ratio’ (UBER).
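Wear leveling can be pictured with a small Python sketch. It is only an illustration under simple assumptions: the class and method names are invented here, the policy is the simplest possible one (always write to the least-worn block), and real controllers use far more elaborate schemes. The endurance default is the 10,000-write MLC figure quoted above.

```python
from dataclasses import dataclass


@dataclass
class Block:
    block_id: int
    erase_count: int = 0


class WearLeveler:
    """Toy allocator (hypothetical): always writes to the least-worn block."""

    def __init__(self, num_blocks, endurance=10_000):
        self.blocks = [Block(i) for i in range(num_blocks)]
        self.endurance = endurance  # MLC write limit quoted in the text

    def write(self):
        # Only blocks that have not reached their write limit are usable.
        usable = [b for b in self.blocks if b.erase_count < self.endurance]
        if not usable:
            raise RuntimeError("all blocks are worn out")
        target = min(usable, key=lambda b: b.erase_count)
        target.erase_count += 1
        return target.block_id


leveler = WearLeveler(num_blocks=4)
for _ in range(10):
    leveler.write()
counts = [b.erase_count for b in leveler.blocks]
print(counts)  # wear differs by at most one write between blocks
```

Because every write goes to the block with the lowest count, no block reaches its limit long before the others, which is the whole point of the technique.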

Both hard disks and solid state drives use this type of error correction code. So does any kind of data transmission, including radio and TV transmission. This ensures reliability as they transfer data. For a data file, the equivalent check is a checksum or MD5 hash (signature). For disk drives, it is a parity bit or bits. Disk drives calculate parity bits as they write data. They record those parity bits as error correction codes (ECC) on the disk drive, along with the raw data.
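The idea of a parity bit is easy to show in a few lines of Python. This is a minimal sketch, not what any particular drive does: it computes a single even-parity bit over a payload, and the function names are made up for the example.

```python
def parity_bit(data: bytes) -> int:
    """Even parity: 1 if the payload contains an odd number of 1 bits."""
    ones = sum(bin(byte).count("1") for byte in data)
    return ones % 2


def is_intact(data: bytes, stored_parity: int) -> bool:
    """Recompute the parity and compare it with the value stored at write time."""
    return parity_bit(data) == stored_parity


payload = b"SSD"
stored = parity_bit(payload)  # recorded alongside the data when writing

# Simulate one flipped bit in the first byte.
corrupted = bytes([payload[0] ^ 0b00000100]) + payload[1:]

print(is_intact(payload, stored))    # True
print(is_intact(corrupted, stored))  # False
```

A single parity bit can only detect an odd number of flipped bits and cannot say which bit is wrong. That is why drives store several check bits per sector rather than one.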

Kingston gives an interesting and clear explanation of the inherent properties of flash storage and the concept of the bit error rate. They say that NAND flash memory has a ‘finite life expectancy and a naturally occurring error rate’. This is because of the physical properties of semiconductors.

When the disk controller reads a block of data, it also reads the stored error correction code and compares it with the code that the ECC algorithm computes from the data just read. If the two do not match and the error cannot be corrected, that block is marked as bad. RAID and other replication schemes are then used to retrieve the correct data from a backup location. ECC algorithms can also, under certain circumstances, reconstruct the data from the ECC code when part of the actual data is lost.
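To see how a code can correct data and not merely detect damage, here is a sketch using the classic Hamming(7,4) code. It is chosen only because it is small enough to read; SSD controllers use much stronger codes (the table further down mentions BCH), and the function names are illustrative.

```python
def hamming_encode(data):
    """Encode 4 data bits as a 7-bit codeword [p1, p2, d1, p4, d2, d3, d4]."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # checks codeword positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # checks codeword positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # checks codeword positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming_decode(codeword):
    """Correct up to one flipped bit and return the 4 data bits."""
    c = list(codeword)  # work on a copy, leave the caller's list alone
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    syndrome = s1 + 2 * s2 + 4 * s4  # 1-based position of the bad bit, 0 if none
    if syndrome:
        c[syndrome - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


word = hamming_encode([1, 0, 1, 1])
damaged = word.copy()
damaged[4] ^= 1  # flip one bit, as a worn cell might
print(hamming_decode(damaged))  # [1, 0, 1, 1]
```

The three check bits overlap in such a way that the pattern of failed checks spells out the position of the damaged bit. Two flipped bits in the same codeword would defeat this small code, which is where the stronger codes and the RAID fallback come in.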

The principles of ECC are the same for HDD and SSD. The difference lies in how data is placed: SSD data cannot be updated in place; a block must be erased before it can be rewritten. This means that the controller writes each update to a fresh location and has to keep track of which version of the data is current, as there will be multiple copies. When a block of memory cells is full of stale data, the controller erases the block and reuses it.
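The bookkeeping described here can be sketched as a toy model in Python. Everything in it is an assumption made for illustration: the class name `TinyFTL`, the two-block layout, and the shortcut of holding live pages in ordinary memory while a block is erased (a real controller would copy them to spare flash).

```python
class TinyFTL:
    """Toy flash translation layer: out-of-place writes plus block erase."""

    def __init__(self, num_blocks=2, pages_per_block=4):
        self.pages_per_block = pages_per_block
        # Each page holds (logical_address, data), or None when erased.
        self.blocks = [[None] * pages_per_block for _ in range(num_blocks)]
        self.mapping = {}  # logical address -> (block, page) of the current copy
        self.erase_counts = [0] * num_blocks

    def _find_free_page(self):
        for b, block in enumerate(self.blocks):
            for p, page in enumerate(block):
                if page is None:
                    return b, p
        return None

    def _live(self, b):
        """Pages in block b that still hold the current version of their data."""
        return [page for p, page in enumerate(self.blocks[b])
                if page is not None and self.mapping.get(page[0]) == (b, p)]

    def _garbage_collect(self):
        # Reclaim the block with the fewest live pages (the most stale data).
        victim = min(range(len(self.blocks)), key=lambda b: len(self._live(b)))
        survivors = self._live(victim)
        if len(survivors) == self.pages_per_block:
            raise RuntimeError("drive full: no stale pages to reclaim")
        self.blocks[victim] = [None] * self.pages_per_block  # whole-block erase
        self.erase_counts[victim] += 1
        for p, (address, data) in enumerate(survivors):  # put live data back
            self.blocks[victim][p] = (address, data)
            self.mapping[address] = (victim, p)

    def write(self, address, data):
        slot = self._find_free_page()
        if slot is None:
            self._garbage_collect()
            slot = self._find_free_page()
        b, p = slot
        self.blocks[b][p] = (address, data)
        self.mapping[address] = (b, p)  # any older copy is now stale

    def read(self, address):
        b, p = self.mapping[address]
        return self.blocks[b][p][1]


ftl = TinyFTL()
ftl.write(7, "keep")
for i in range(9):
    ftl.write(0, f"v{i}")  # each update lands on a fresh page
print(ftl.read(0), ftl.read(7), ftl.erase_counts)
```

In this run the nine updates to address 0 fill both blocks with stale copies, so the model has to erase one block to make room, while the untouched value at address 7 survives the erase.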

Reliability of SSDs over time

What can we say about the reliability of SSD today? Five years ago, the technology was not as common as today, because it cost too much. Tech writers then were writing articles with headlines like ‘How SSDs can hose your data’. The reviewers were intimating that such devices were undependable. At that time, flash drives were also thought to be incapable of surviving repeated power outages.

So what has changed since then to improve the reliability of SSD? The key thing is that there have been improvements to error correction codes. Furthermore, better capacitors have been introduced to keep volatile memory powered for a short time. This gives the time for the cache to be written to solid state storage in the case of a power failure.

Current situation

There is not much difference between the different types of SSDs in terms of reliability and speed. They all operate with pretty much the same physical characteristics. The only difference lies in how many drives you want to plug into the array, to increase their capacity. Below we provide a list of some current SSD models, their expected lifespan, and whether they are for the low- or high-end market (i.e. PC or enterprise).

But first, Samsung has addressed the issue of memory fatigue. It says the SM843T Data Center Series has ‘extreme write endurance’. To address the power-failure issue, their drive includes tantalum capacitors. Tantalum capacitors are more expensive than regular capacitors. A capacitor holds an electric charge over a short period of time when its electricity is off. So, in the case of a power failure, the drive has time to write cache to permanent, non-volatile NAND storage.

Samsung says their SM843 drive has an UBER of 1 error per 10^17 bits, or 10^-17. That figure changes in a linear fashion over time: the BER of memory cells increases in a linear (i.e. steady and predictable) manner. The ECC algorithm built into the controller knows this. As such it changes its data dispersal algorithm over time to ensure reliable operations.
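To put an UBER of that size in perspective, a short calculation helps. The sketch below takes the quoted figure of 1 uncorrectable error per 10^17 bits at face value and treats it as constant, which is a simplification; the function name is made up for the example.

```python
def expected_uncorrectable_errors(bytes_read, uber=1e-17):
    """Expected number of uncorrectable bit errors when reading bytes_read bytes."""
    bits_read = bytes_read * 8
    return bits_read * uber


PETABYTE = 10**15  # decimal petabyte, in bytes

# Reading a full petabyte is expected to hit well under one bad bit.
print(expected_uncorrectable_errors(PETABYTE))  # about 0.08
```

Put another way, under this assumption you would expect to read roughly 12.5 petabytes before meeting a single uncorrectable bit.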

Samsung says that the SM843 drive has a two million hour mean time between failures (MTBF). This does not mean that the device will last two million hours. MTBF is a statistical failure rate measured across a large population of drives. The number is a function of the bit error write times. This is the greatest throughput (write speed) at which the device will operate.
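One common way to read an MTBF figure is to convert it into an annualized failure rate (AFR). The sketch below assumes a constant failure rate (the exponential model), which is a textbook simplification and not something Samsung states; the function name is illustrative.

```python
import math

HOURS_PER_YEAR = 24 * 365


def annualized_failure_rate(mtbf_hours):
    """Probability that a drive fails within one year of continuous operation,
    assuming a constant failure rate."""
    return 1 - math.exp(-HOURS_PER_YEAR / mtbf_hours)


afr = annualized_failure_rate(2_000_000)
print(f"{afr:.2%}")  # roughly 0.44% per year
```

On that reading, a two million hour MTBF means that in a fleet of 1,000 drives running around the clock you would expect about four failures a year.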

Seagate, in a surprising statement, says that manufacturers are overreaching. Presumably they are not talking about themselves. They are overreaching, Seagate says, in their efforts to pack more storage into the same space and to reduce costs. Below we cite some test observations and warranty data from different manufacturers. None of the tests shows poor results, except perhaps for the Samsung 840, which uses three-bit cells instead of two-bit cells. Still, it is shocking for Seagate to write that ‘while new generations of NAND flash memory are being developed on smaller silicon geometries to reach higher densities and reduce the cost of flash memory, their overall endurance is dropping at a very high rate’. We would hope that is not the case for the average data center. Let’s look at some facts.

Some sample models and their expected lifespans

Actual observed lifetime in the field would be the best way to measure SSD expected lifetime. But this would be difficult, because the swappable nature of these devices lets you replace failed components with new ones in a disk array. Perhaps a reliable way to estimate SSD lifespan would be to look at the manufacturer’s warranty. Below are some hard numbers on that. Violin’s CEO makes the point that their SSDs match the 3 to 5 year depreciation schedule of HDDs. So they should have a comparable lifespan. (Depreciation is an accounting system used in the USA. It relates the expected lifespan of a device to the number of years a company can charge that item to expenses and so reduce their taxes.)

The table below lists some SSD models. The columns show whether the model is designed for the enterprise or mid-level market. They also show the warranty in years, if available. Finally, they comment on the technology used to ensure data integrity and recoverability.

| Model | Market | Warranty or other lifetime information | Data integrity |
| --- | --- | --- | --- |
| Seagate® SandForce SF2600 and SF2500 | Enterprise | 5 years because of good block management and wear levelling | BCH ECC algorithm and RAISE (Redundant Array of Independent Silicon Elements) up to 55 bits correctable per 512-byte sector |
| Violin Concerto 7000 All Flash Array | Enterprise | Matches 3 to 5 year life depreciation cycle of HDD disks | Controllers deployed as high availability pairs |
| RAIDDrive GS | Mid-market | (no data) | RCC and RAID |
| HGST (Western Digital) 2200GB, 1650GB & 1100GB | Enterprise | 5 year warranty | ECC and RAID |
| IBM FlashSystem V840 | Enterprise | 3 year warranty | Variable Stripe RAID and two-dimensional flash RAID |

Expected lifetime study

Hardware.info ran a two-year field test, which ended last year, on a Samsung 840 SSD. This is a low-priced drive suitable for consumer laptops. Samsung guaranteed 1,000 write operations per cell for its triple-level cells (TLC), but in practice the cells sustained three times as many. Hardware.info also unplugged the power at certain intervals, and left the power off. This tested whether memory cells might lose data after 1,000 operations. They found there to be no problem with that. The first uncorrectable write errors occurred after 3,000 operations. At 4,000 operations they said that the drive was ‘clinically dead’, as write errors reached 58,000.
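A figure such as 1,000 write operations per cell is easier to judge once it is turned into total data written. The sketch below does that arithmetic. The 250 GB capacity and the 20 GB per day workload are assumptions picked for illustration and do not come from the Hardware.info test, and the default write amplification of 1.0 is optimistic.

```python
def total_writes_tb(capacity_gb, write_cycles, write_amplification=1.0):
    """Total host data (in TB) the drive can absorb before cells reach their limit."""
    return capacity_gb * write_cycles / write_amplification / 1000


def lifetime_years(capacity_gb, write_cycles, gb_per_day, write_amplification=1.0):
    """Years until the limit is reached at a steady daily write volume."""
    total_gb = total_writes_tb(capacity_gb, write_cycles, write_amplification) * 1000
    return total_gb / gb_per_day / 365


# Assumed: a 250 GB drive, 1,000 guaranteed cycles, 20 GB written per day.
print(total_writes_tb(250, 1000))               # 250.0 TB
print(round(lifetime_years(250, 1000, 20), 1))  # about 34 years
```

Even with a write amplification of 3, the same assumed drive would take more than ten years to reach the guaranteed limit at that workload, which helps explain why the testers had to write continuously to wear it out.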

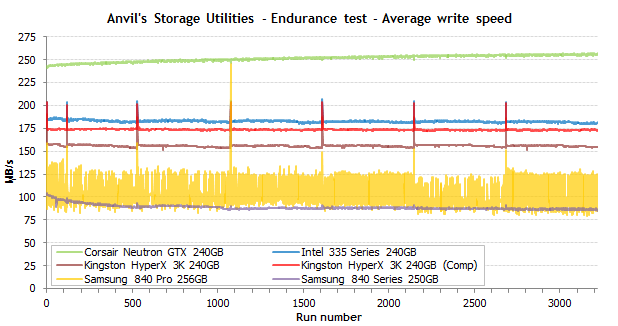

How long a drive lasts is also related to how much you use it. That was the point of another study, which sought to wear out the devices faster. They completed more operations in a shorter time than one would in practice. This study, by the Tech Report, was also geared toward consumer, PC-grade hardware. They tested the Corsair Neutron GTX 240GB, Intel 335 Series 240GB, Kingston HyperX 3K 240GB, and Samsung 840 Pro 256GB. These are all based on two-bit MLC flash. They also tested the Samsung 840 Series 250GB, which uses three-bit TLC flash.

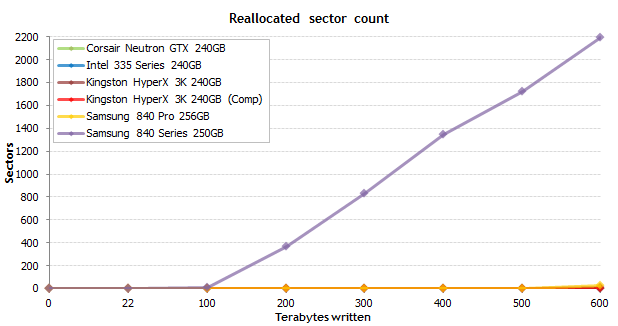

At the end of the 600 TB test, the testers ran a checksum over the files they copied and there were no reported errors. The Samsung at this point had marked one sector as reallocated, meaning it was bad and unavailable for future use. From then on, the Samsung drive reallocated sectors in a linear fashion. These increased with usage, as shown in the graph below. The graph shows it wearing out over time, unlike the other models. The difference comes down to its 3-bit memory cells, which wear out faster than 2-bit cells because they cram more bits into each cell (to reduce costs).

The testers took snapshots of the results at the 200 TB and 300 TB benchmark on their way to a 1 petabyte snapshot. They have not yet documented those results. As you can see, any memory cell degradation measured at the 300 TB checkpoint has little noticeable effect on performance for the different drives tested.

Conclusion

Given the information outlined above, we can see that SSDs are reliable for enterprise applications. Their main use, however, is in cell phones and tablets. For example, this is what iPads and iPhones use. For PCs, you can buy an all-SSD laptop, fitted with a drive like the Samsung 840 SSD discussed above. But it is more common for the manufacturer to store the recovery disk on SSD so the user can recover their PC when their hard drive has a critical fault. Users can copy their operating system to it, to make their computer run faster.

For enterprise, IBM says the answer to the question of whether to use all-SSD is to consider the total cost of ownership (TCO), the dollars per terabyte, and the dollars per IOPS. So get out your pen and paper. Then call your salesman for a quote. (Prices for hardware, electricity, negotiated discounts, and facilities rental vary between locations.)

When calculating TCO, flash storage vendors emphasize that there is more to consider than the initial upfront cash expenditure or lease payments. SSD uses less electricity and takes up less floor space. This further reduces electricity costs for air conditioning. Moreover, they say that the superior performance of SSD over HDD means that a company can reduce licensing fees. For instance, if one application server can do the work of three, using high-speed PCI SSD storage, then there is no need to pay Oracle three times over for WebLogic.
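The pen-and-paper exercise can be sketched in a few lines of Python. All of the inputs below are hypothetical placeholders (price, wattage, electricity tariff, capacity, and IOPS), chosen only to show the arithmetic; the model also leaves out floor space, cooling, and discounts, which vary by location.

```python
HOURS_PER_YEAR = 24 * 365


def total_cost_of_ownership(purchase_price, power_watts, price_per_kwh,
                            years, licence_fees_per_year=0.0):
    """Purchase price plus electricity and licence fees over the service life."""
    energy_kwh = power_watts / 1000 * HOURS_PER_YEAR * years
    return purchase_price + energy_kwh * price_per_kwh + licence_fees_per_year * years


def cost_per_terabyte(tco, capacity_tb):
    return tco / capacity_tb


def cost_per_iops(tco, iops):
    return tco / iops


# Hypothetical array: $10,000, 100 W, $0.10 per kWh, kept for 5 years.
array_tco = total_cost_of_ownership(10_000, 100, 0.10, 5)
print(round(array_tco, 2))                             # 10438.0
print(round(cost_per_terabyte(array_tco, 10), 2))      # 1043.8 dollars per TB
print(round(cost_per_iops(array_tco, 100_000), 4))     # 0.1044 dollars per IOPS
```

Running the same three functions with quotes for an HDD array and an SSD array gives comparable dollars-per-terabyte and dollars-per-IOPS figures, which is the comparison the vendors recommend.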

If the cost is more or less the same, use SSD. SSD is much faster than HDD. This means it will boost customer satisfaction due to the increased performance of apps and websites.

Reliability is a more complex question. Much of the negative literature on the internet relates to laptops’ SSDs. The literature does not relate to commercial-grade equipment. Also much of the literature dates from 2009 and earlier, yet it shows up high in Google, so people might well be reading old reports. The technology is in fact better than those critics say, as testing reveals. Wear leveling has extended the life of memory cells. High capacity capacitors have reduced the risk of data loss due to a power failure.

If the manufacturer says their warranty is three to five years, then they will replace their product for free if problems arise during that period. So the point about endurance turns out to be a moot one.
